Jitter Bug

One of the largest hurdles in the virtualization migration plan was the big unknown of whether the tinderboxen that perform tests could be virtualized.

Now that we have one (somewhat modern) tinderbox, argo, cloned into a VM and also running on physical hardware, we have some data to look at.

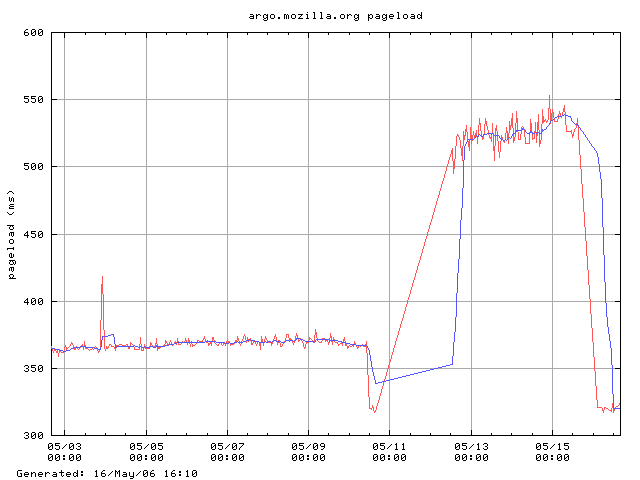

The data for “argo” is actually a bit confusing: the machine instance was cloned rather than migrated, but the machine’s identity was cloned along with it. That is, “argo” on May 10th was a physical machine; “argo” after May 10th was a virtual machine. Then, on May 16th, “argo” once again became a physical machine, with the virtual machine copy appearing on the tinderbox page as “argo-vm.”

On to the graphs!

The Good News

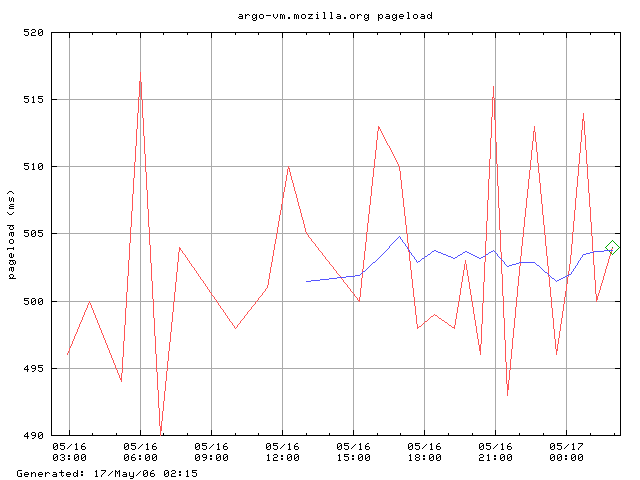

The increase in Tp is expected, due to the virtualization, but the jitter went from about 5 ms to 12-15 ms, according to Stuart’s initial reading on IRC. It’s interesting that the first set of numbers from the physical argo are smaller than the numbers from argo on physical hardware before the migration. Did someone check in some performance-fixing code?

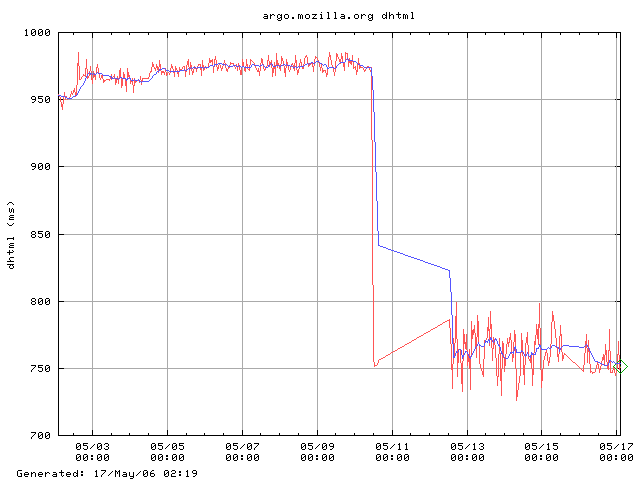

Looks to be about 20 ms jitter in physical vs. about 50 ms in virtual.
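The jitter figures here are eyeballed from the graphs. For a less subjective reading, one could compute the spread directly from the raw samples. What follows is only a minimal sketch, assuming the timing values are available as a plain list of milliseconds; the function name `jitter_summary` and the sample numbers are made up for illustration and are not taken from the tinderbox data.

```python
import statistics

def jitter_summary(samples_ms):
    """Return (peak_to_peak, stdev) for a list of timing samples in ms."""
    if len(samples_ms) < 2:
        raise ValueError("need at least two samples to measure jitter")
    peak_to_peak = max(samples_ms) - min(samples_ms)
    stdev = statistics.stdev(samples_ms)  # sample standard deviation
    return peak_to_peak, stdev

# Hypothetical values, NOT real tinderbox measurements.
physical = [400, 410, 405, 420, 415]
virtual = [450, 500, 470, 455, 490]

for name, samples in (("physical", physical), ("virtual", virtual)):
    p2p, sd = jitter_summary(samples)
    print(f"{name}: peak-to-peak {p2p} ms, stdev {sd:.1f} ms")
```

Peak-to-peak is closest to what you read off a graph by eye, while the standard deviation is less sensitive to a single outlying run.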

The Not-As-Good News

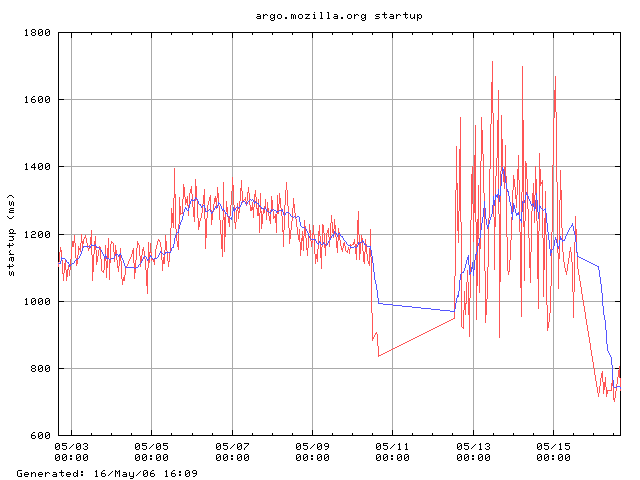

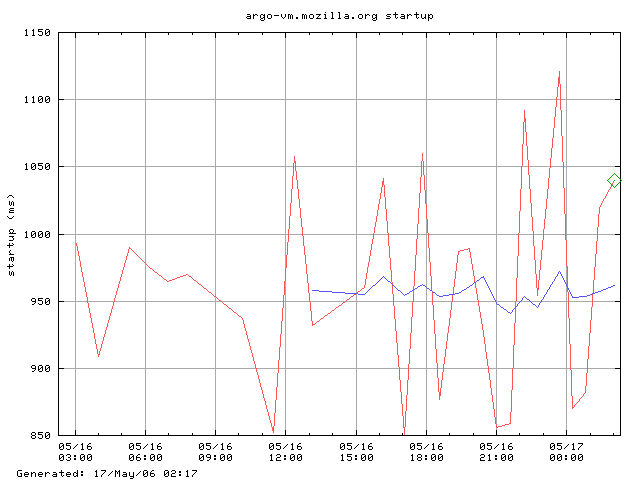

You can really see the (obviously unacceptable) jitter here. Physical jitter looks to be about 50 ms, while virtualized jitter is an order of magnitude larger. The virtualized jitter on Ts below, with the minimum service levels, seems somewhat better.

The Encouraging News

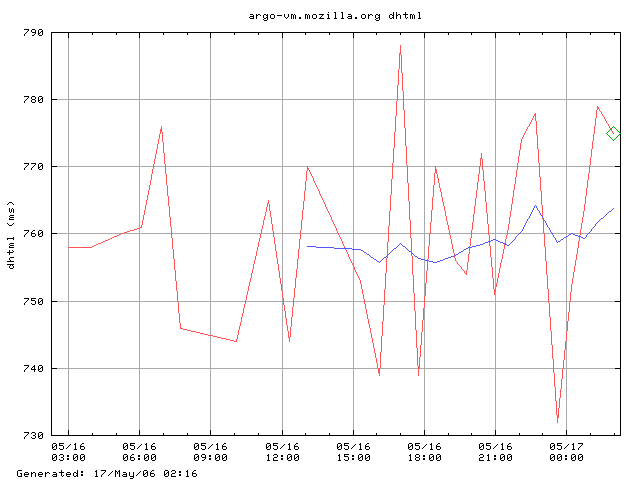

The smaller jitter is still around 10 ms, a slight improvement, but the outlying jitter is less encouraging (25 ms at the beginning and 15 ms on the right).

The Ts jitter still looks pretty bad, but it’s down by more than half, to about 200 ms.

At its worst, we dropped about 10 ms of Tdhtml jitter in the virtualized case. There’s definitely room for improvement here.

Stay tuned!

The upshot: the jitter was much lower than I expected for Tp, but much higher than I would’ve expected for Ts, especially given Tp’s jitter.

This data was from the VM before it was set up to have a guaranteed minimum scheduling level, as provided by the virtualization layer. It’s currently set at a minimum of 33% of the (V)CPU and a maximum of 100% of the (V)CPU, along with some network priority settings.

If this doesn’t bring the jitter down, there are some other tricks I plan on trying. One is constraining the scheduling level more (so, a window of 33% to 50%, or even a smaller window of 33% to 33%). Another is turning off VMware’s Guest Tools’ time synchronization, which has a tendency to clobber the clock at unknown intervals. Normally this is done in such a way that it’s not noticed, but when using high-resolution timing, that method of syncing the clock could produce odd results.
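To make the time synchronization concern concrete, here is a toy model of how a single clock step can distort a measured duration. It is a sketch under stated assumptions, not a description of how VMware's tools actually adjust the clock: the function `measure`, the single-step behavior, and all the numbers are hypothetical.

```python
def measure(start_true_ms, duration_ms, sync_at_ms, step_ms):
    """Elapsed time as seen by a clock that is stepped once by `step_ms`.

    All parameters are hypothetical: the clock reads true time until
    `sync_at_ms`, after which every reading is offset by `step_ms`.
    """
    def clock(true_ms):
        return true_ms + (step_ms if true_ms >= sync_at_ms else 0)

    end_true_ms = start_true_ms + duration_ms
    return clock(end_true_ms) - clock(start_true_ms)

# A 300 ms run that does not straddle the sync is measured correctly.
print(measure(0, 300, sync_at_ms=1000, step_ms=-40))    # 300
# The same 300 ms run straddling a -40 ms step reads as 260 ms.
print(measure(900, 300, sync_at_ms=1000, step_ms=-40))  # 260
```

In this model only the runs that happen to straddle a sync are affected, which would show up as occasional outliers rather than a uniform shift.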

Overall, I’m pretty encouraged. I thought the Tp times were relatively good, given the VM had no custom configuration settings. I’m actually surprised that the Ts data is so much more skewed than the Tp data, which relies on more resources (network, mostly).

We’ll be getting more data on physical vs. virtualized performance testing as the last set of performance tinderboxen get migrated, so a clearer solution should begin to present itself once we have that data and sift through it.